The object detection techniques described in the previous post work fairly well in a wide range of situations except when our robot is standing right in front of the field border. Field borders are ~10cm high white “walls” and when one of those is directly in front of the robot, the effect is that the whole lower part of the camera image is basically white. This confuses our border locator and everything beyond the wall starts getting recognized as being actually within the playing field.

Instead of improving the border locator to deal with that, we added a simple “wall proximity detector” module, which would recognize the situation described above and report it in a binary flag. The information about such a “wall proximity” condition is then accounted for in the ball- and goal detector modules (there is basically a condition which says “if we bumped into the wall then everything further away than 10-20cm is not interesting”).

In addition, the “wall proximity flag” is used in the behaviour algorithm – getting stuck bumping into the wall is certainly a condition that we want to avoid.

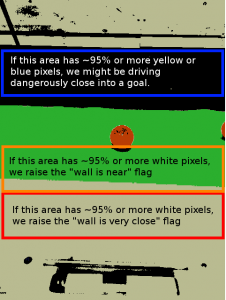

Detecting the wall proximity condition is fast and easy. To perform it, we simply need to make sure that a certain fixed region of the image is nearly all white. We do that by sampling 100 pixels from that region randomly and if 95 or more of them are white we declare that we are probably bumping into a wall.

In fact, we perform this procedure for three different regions – the “very close” region at the bottom of the frame, the “fairly close” region above it, and a third region yet higher above, where we look for yellow or blue pixels – this lets us detect a situation where we are in danger of driving directly into a goal (which would be a violation of Robotex rules).

The vertical position of the three detection regions depends slightly on the angle of the smartphone. To account for that, we have a simple calibration routine, which detects the vertical coordinate at which our robot’s front edge is located on the image and adjusts the region positions accordingly.

It must be said that, despite the simplicity of the approach, it has been an enormously useful solution. Most of our initial attempts of implementing smart steering (i.e. avoiding getting stuck near the wall or driving into a goal) were often either inefficient or prone to errors in borderline cases. The simple idea of making the robot “get scared” of seeing large regions of white in front of him, however, tackled most of the complicated cases unexpectedly well.

As usual, I’ll finish with a sample of our actual code:

class WallProximityDetector {

public:

WallProximityDetector(const CMVProcessor& cmvision);

// Loads/saves/calibrates the vertical offset

void load(QString filename);

void save(QString filename) const;

void calibrateFromFrame();

void init();

void processFrame(); // Perform proximity detection

void paint(QPainter* painter) const; // Debug paint

// The three conditions that are recognized in processFrame

bool wallClose;

bool wallVeryClose;

bool goalClose;

protected:

// ... omitted ...

}