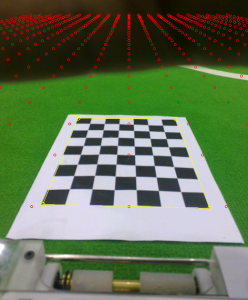

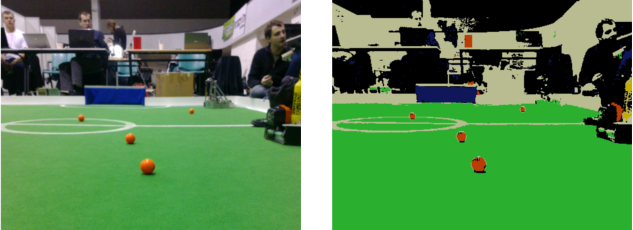

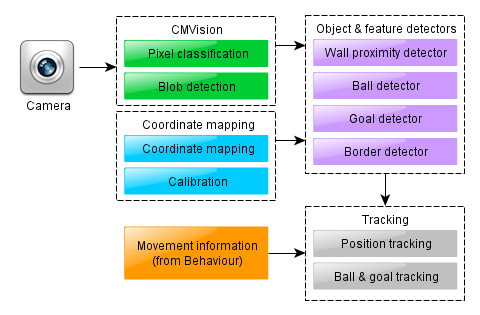

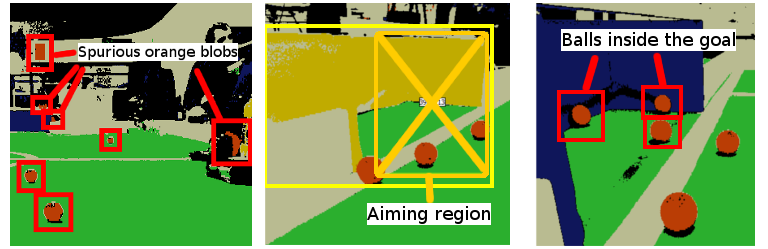

The pixel classifier/blob detector and the coordinate mapper modules described in the previous posts establish a solid base for solving the central task of our vision processing system: object recognition. Thanks to the color-coding, a large part of this task is already solved by the blob detector. Indeed, every orange blob should be regarded as a candidate ball and every blue or yellow blob as a candidate goal. Two things remain to be done: 1) we need to filter out “spurious” blobs (i.e. those that do not correspond to balls or goals) and 2) we need to extract useful features such as the position of the ball(s) or the location of the goalposts.

Finding actual balls and goals among the candidates

So how do we tell a “spurious” orange blob from one that corresponds to a ball? Most contemporary state-of-the-art computer vision methods that deal with classifying objects in an image approach the problem by first extracting a number of features and then making the decision based on those. The features can be anything ranging from “average color” to “is this pixel a corner” to “whether there is a green stain in the vicinity”. Interestingly, it is believed that human visual perception works in a similar manner: by first extracting simple local features from the picture and then combining them in order to detect complex patterns. By observing the picture on the right, for example, one can see that the presence of four local “corner” features in an image is sufficient to strongly mislead the brain into believing that a complete square is present there.

The choice of appropriate features is therefore of paramount importance for achieving accurate object recognition. The task is complicated by the fact that we want the computation to be fast. The problem of finding a set of easy-to-compute features that are, at the same time, sufficiently informative for recognizing Robotex balls and goals is highly specific to Robotex. Hence, there are no nice and easy “general”, reusable solutions. They have to be found in a somewhat ad-hoc manner, by taking pictures of the actual balls and goals at various angles and experimenting with different options until suitable accuracy is achieved. In fact, each of the Tartu teams came up with their own, slightly different take on the object recognition problem, and all of them seemed to work well enough. In the following we present our team’s approach, which should be regarded as an example of one of many equally reasonable possibilities. In no way is it optimal, but it did well enough in most practical situations and at the competition.

Features for object recognition

For both ball and goal recognition (in other words, for filtering spurious blobs) we used four kinds of features (or filters): blob area, coordinate mapping, neighboring pixels and border detection. Let’s discuss those in order:

Blob area and size provide a simple criterion for filtering out unreasonably small or large blobs. For goal detection this is especially relevant, as we know that even when seen from far away and partly covered by an opponent, the goal must still be visible as a fairly large patch of color. Also, at the very end of our filtering procedure, if we still had several “candidate goals”, we would retain only the largest one.
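The area check and the final "keep the largest goal" step could be sketched as follows. This is a minimal illustration, not our actual code: the `Blob` struct, the function names and the thresholds are all assumptions made for the example.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical blob record: bounding box plus pixel count from the blob detector.
struct Blob {
    int x, y, width, height;  // bounding rectangle in image pixels
    int area;                 // number of pixels classified as the blob color
};

// Keep only blobs whose pixel area lies in [minArea, maxArea].
// The thresholds are assumptions and would have to be tuned on real frames.
std::vector<Blob> filterByArea(const std::vector<Blob>& blobs, int minArea, int maxArea) {
    std::vector<Blob> kept;
    for (const Blob& b : blobs) {
        if (b.area >= minArea && b.area <= maxArea) kept.push_back(b);
    }
    return kept;
}

// Final tie-break for goals: of the remaining candidates, pick the largest.
// Returns nullptr when there are no candidates.
const Blob* largestBlob(const std::vector<Blob>& blobs) {
    auto it = std::max_element(blobs.begin(), blobs.end(),
        [](const Blob& a, const Blob& b) { return a.area < b.area; });
    return it == blobs.end() ? nullptr : &*it;
}
```

In use, one would call `filterByArea` on all blobs of a goal color and, after the remaining filters, pass the survivors to `largestBlob`.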

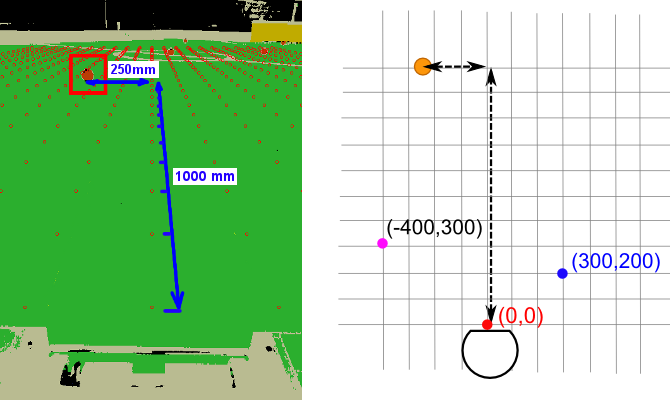

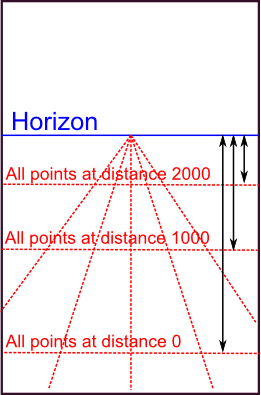

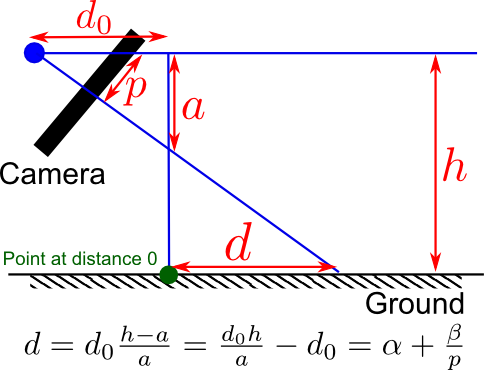

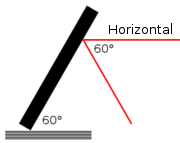

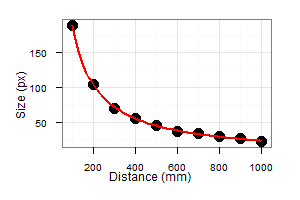

Coordinate mapping is the second most obvious technique. We pass the coordinates of the blob’s lower central pixel through our coordinate mapper to obtain its hypothetical position in the real world. If that position is not within the playing field, we can safely discard the blob as a false positive. An additional useful test is to map the width and the height of the blob to the corresponding world dimensions. If those do not match the expected size, we discard the blob. For example, a ball should not be larger than 10 cm in height, even accounting for coordinate mapping errors, and a goal may not be smaller than 5 cm.
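A rough sketch of these world-space checks is given below. It assumes the coordinate mapper has already produced the position and size in metres; the `WorldBlob` and `Field` types are hypothetical, the field is simplified to a rectangle in the same frame as the mapper output, and applying the 5 cm minimum to both goal dimensions is our guess for the sake of the example.

```cpp
// World-space measurements of a candidate blob, in metres, as they would come
// out of a coordinate mapper (the mapper itself is not shown here).
struct WorldBlob {
    double x, y;           // position of the blob's lower central pixel on the floor
    double width, height;  // blob extent mapped to world units
};

// Hypothetical field description: an axis-aligned rectangle in world coordinates.
struct Field {
    double minX, maxX, minY, maxY;
    bool contains(double x, double y) const {
        return x >= minX && x <= maxX && y >= minY && y <= maxY;
    }
};

// A ball candidate must lie on the field and be at most 10 cm tall.
bool plausibleBall(const WorldBlob& b, const Field& f) {
    const double maxBallHeight = 0.10;
    return f.contains(b.x, b.y) && b.height <= maxBallHeight;
}

// A goal candidate must lie on the field and be at least 5 cm in each dimension
// (which dimension the limit applies to is an assumption of this sketch).
bool plausibleGoal(const WorldBlob& b, const Field& f) {
    const double minGoalSize = 0.05;
    return f.contains(b.x, b.y) && b.width >= minGoalSize && b.height >= minGoalSize;
}
```

In practice the field rectangle would need some slack, since the goals sit on the field edge and the mapping becomes less accurate with distance.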

The two filters above do a fairly good job of discarding most of the irrelevant blobs, yet they are helpless if a feature of an opponent’s robot (such as a red LED or a motor) resembles an actual tiny ball. A nice way of filtering out those cases is to look at neighboring pixels. We simply count the proportion of pixels that are neither green nor white directly around an orange blob (to be more precise, around the rectangle encompassing it). A real ball lying on the field is surrounded mostly by green carpet or white lines, so if this proportion is suspiciously high, we conclude that this can’t be a valid playing ball.
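The neighbor count could look roughly like this. The `PixelClass` enum and `ClassifiedImage` type are stand-ins invented for the example (the real pixel classes come from the blob detector), and this sketch probes every pixel of the frame around the rectangle rather than a fixed number of evenly spaced ones.

```cpp
#include <vector>

enum class PixelClass { Green, White, Orange, Other };

// Hypothetical classified frame: one PixelClass per pixel, row-major.
struct ClassifiedImage {
    int width, height;
    std::vector<PixelClass> pixels;
    PixelClass at(int x, int y) const { return pixels[y * width + x]; }
};

// Fraction of pixels on the one-pixel frame just outside the rectangle
// [left..right] x [top..bottom] (inclusive) that are neither green nor white.
// Probes falling outside the image are skipped; returns 0 if nothing was probed.
double foreignNeighborFraction(const ClassifiedImage& img,
                               int left, int top, int right, int bottom) {
    int probed = 0, foreign = 0;
    auto probe = [&](int x, int y) {
        if (x < 0 || y < 0 || x >= img.width || y >= img.height) return;
        ++probed;
        PixelClass c = img.at(x, y);
        if (c != PixelClass::Green && c != PixelClass::White) ++foreign;
    };
    // Top and bottom edges of the frame, including the corners.
    for (int x = left - 1; x <= right + 1; ++x) { probe(x, top - 1); probe(x, bottom + 1); }
    // Left and right edges, without the corners already counted above.
    for (int y = top; y <= bottom; ++y) { probe(left - 1, y); probe(right + 1, y); }
    return probed == 0 ? 0.0 : static_cast<double>(foreign) / probed;
}
```

A ball on open carpet yields a value near 0, while an LED on a robot body yields a value near 1. The cut-off between the two would have to be chosen experimentally.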

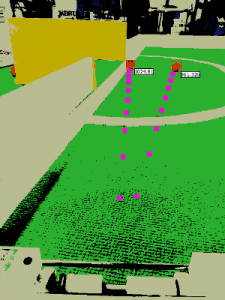

Finally, we must still be able to filter out spurious ball- and goal-like objects lying outside the field boundaries. A method that we call the “border locator” turned out to be invaluable here. Our border locator procedure starts at the pixel with the world coordinates (0, 0), that is, directly in front of the robot, and then moves in the direction of an object of interest (e.g. a candidate ball) in steps of a fixed length (e.g. 8 cm in world coordinates), probing the corresponding pixels. If 5 of those pixels in a row happen to be non-green before the target pixel is reached, we conclude that the edge of the field is separating us from the object. The procedure is not perfect: if we are looking at a ball directly along a white line, the border locator will report it to be “outside the borders”. However, such situations are rare enough that we ignored them.
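The probing loop can be sketched as below. This is a simplified illustration that returns a plain `bool` instead of a probe result object; the `isGreenAt` callback is a hypothetical stand-in for "map this world point to a pixel and check whether it is classified as green".

```cpp
#include <cmath>
#include <functional>

// Walks from the robot (world origin) towards the target point in fixed steps
// and reports whether `maxNonGreen` consecutive probes were non-green before
// the target was reached, i.e. whether a field border seems to lie in between.
// Distances are in metres; the defaults follow the numbers quoted in the text.
bool borderBetween(double targetX, double targetY,
                   const std::function<bool(double, double)>& isGreenAt,
                   double stepM = 0.08, int maxNonGreen = 5) {
    const double dist = std::hypot(targetX, targetY);
    if (dist == 0.0) return false;  // target is at the origin, nothing to probe
    const double ux = targetX / dist, uy = targetY / dist;  // unit direction
    int nonGreenRun = 0;
    for (int i = 1; i * stepM < dist; ++i) {
        const double d = i * stepM;
        if (isGreenAt(ux * d, uy * d)) {
            nonGreenRun = 0;                       // back on the carpet
        } else if (++nonGreenRun >= maxNonGreen) {
            return true;                           // long non-green stretch: border
        }
    }
    return false;
}
```

Requiring several non-green probes in a row is what lets the walk step over a thin white line or another ball without declaring a border.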

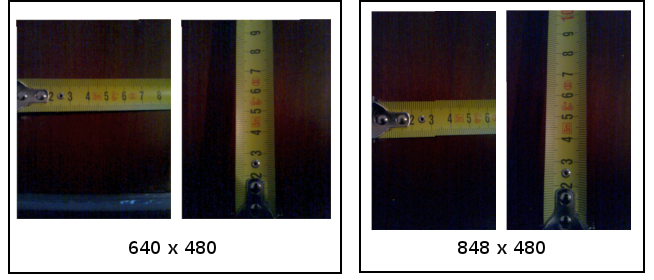

Note that all of the checks described above are very cheap. The blob area and coordinate mapping checks require around ten comparisons. The scan of neighboring pixels is also fast, because it suffices to check at most 100 pixels or so, spread out evenly along the border. Finally, in the border location procedure with a step size of 8 cm we are guaranteed to either reach the object or hit a white border in approximately 5 m / 0.08 m ≈ 62 steps. Consequently, we spend at most 200 steps in total to analyze each candidate blob, and typically far fewer. Compared to a single pass over the whole image (which requires examining 640×480 = 307,200 pixels), this is negligible.

Extracting useful features

After we have filtered out the spurious blobs, we do some post-processing. For the balls, we do the following:

- Check whether at least 75% of the neighboring pixels are white. If so, we mark the ball as being probably “near a wall”. Grabbing such balls is more complicated than grabbing others, so our algorithm ignores them at the beginning of the game.

- Check whether the ball’s blob lies within a goal’s blob. If so, we mark the ball as being “in the goal”, which means we do not need to bother with it. This is not a very precise criterion, of course, but it works surprisingly well in 99% of cases.

- We also label the blob as being a potential group of several balls (rather than just one ball) if its dimensions suggest so. This is, however, an imprecise test, which was never needed in practice.
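The first two labels from the list above might be computed along these lines. The types and names are made up for the example, "lies within" is interpreted here as the ball's rectangle being fully contained in a goal's rectangle, and the group-of-balls test is left out because its exact rule is not given.

```cpp
#include <vector>

// Axis-aligned rectangle in image pixels.
struct Rect {
    int x, y, width, height;
    // True if `o` lies entirely inside this rectangle.
    bool contains(const Rect& o) const {
        return o.x >= x && o.y >= y &&
               o.x + o.width <= x + width && o.y + o.height <= y + height;
    }
};

struct BallFlags {
    bool nearWall;  // probably lying next to a wall
    bool inGoal;    // already inside a goal, can be ignored
};

// `whiteNeighborFraction` is the share of white pixels around the ball's
// rectangle (computed elsewhere); `goals` holds rectangles of accepted goals.
BallFlags classifyBall(const Rect& ball, double whiteNeighborFraction,
                       const std::vector<Rect>& goals) {
    BallFlags flags{false, false};
    flags.nearWall = whiteNeighborFraction >= 0.75;  // 75% threshold from the text
    for (const Rect& goal : goals) {
        if (goal.contains(ball)) { flags.inGoal = true; break; }
    }
    return flags;
}
```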

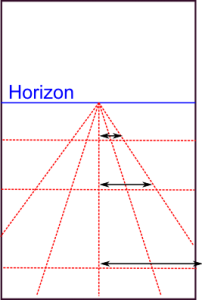

For the goals, we have to detect the situation where we see the goal at an angle, which means our aiming region cannot simply be chosen to be the whole blob (see figure above). This particular task was one of the trickiest parts of the whole vision processing, and after trying numerous (fairly involved) approaches we ended up with the following unexpectedly stupid, yet fast and working, method:

- Split the rectangle encompassing the putative goal into two halves, left and right.

- Sample 200 pixels randomly from each half and count how many of those are green.

- If the proportion of green pixels in one of the halves is greater than in the other, presume we are viewing the goal at an angle and have to aim at the “greener” half.
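The three steps above can be sketched as follows. The `AimSide` enum, the function name and the `isGreen` callback are hypothetical. The sketch also treats any difference in the green counts as significant, whereas a real implementation would probably want some margin to absorb sampling noise.

```cpp
#include <functional>
#include <random>

enum class AimSide { Whole, Left, Right };

// Splits the goal's bounding rectangle into a left and a right half, samples
// `samples` random pixels from each, and returns the half with more green
// pixels. Since both halves get the same number of samples, comparing counts
// is equivalent to comparing proportions.
AimSide chooseAimSide(int left, int top, int width, int height,
                      const std::function<bool(int, int)>& isGreen,
                      std::mt19937& rng, int samples = 200) {
    if (width < 2 || height < 1) return AimSide::Whole;  // too small to split
    const int mid = left + width / 2;
    // Counts green pixels among random samples with x in [x0, x1).
    auto countGreen = [&](int x0, int x1) {
        std::uniform_int_distribution<int> dx(x0, x1 - 1);
        std::uniform_int_distribution<int> dy(top, top + height - 1);
        int green = 0;
        for (int i = 0; i < samples; ++i) {
            if (isGreen(dx(rng), dy(rng))) ++green;
        }
        return green;
    };
    const int greenLeft = countGreen(left, mid);
    const int greenRight = countGreen(mid, left + width);
    if (greenLeft > greenRight) return AimSide::Left;
    if (greenRight > greenLeft) return AimSide::Right;
    return AimSide::Whole;
}
```

Random sampling keeps the cost fixed at 400 pixel lookups regardless of how large the goal appears in the frame.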

Summary and code

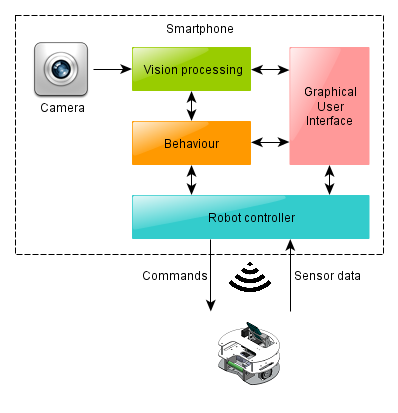

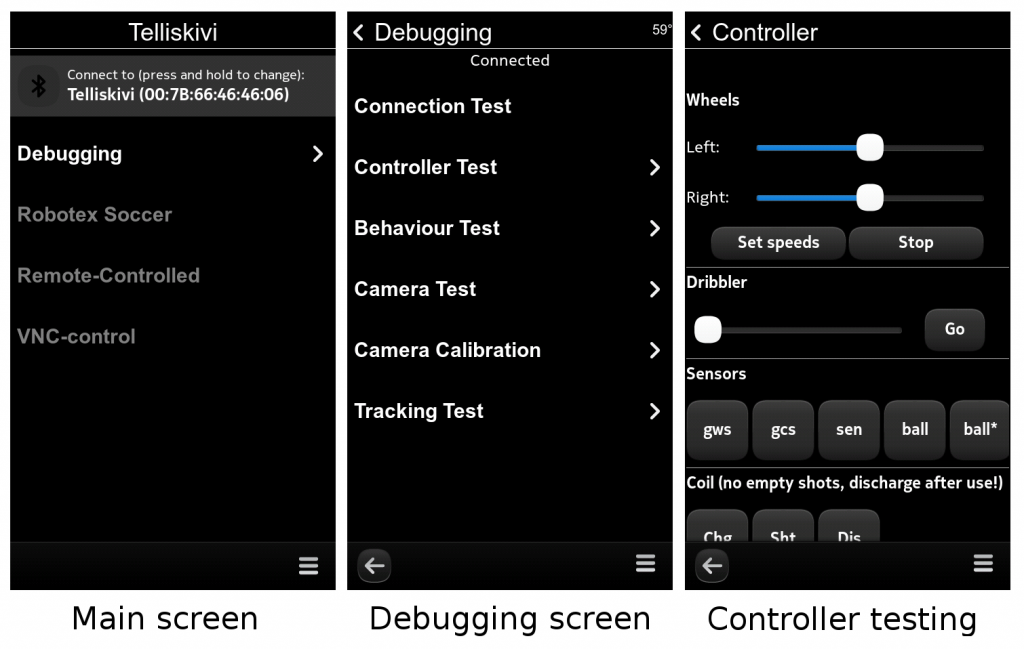

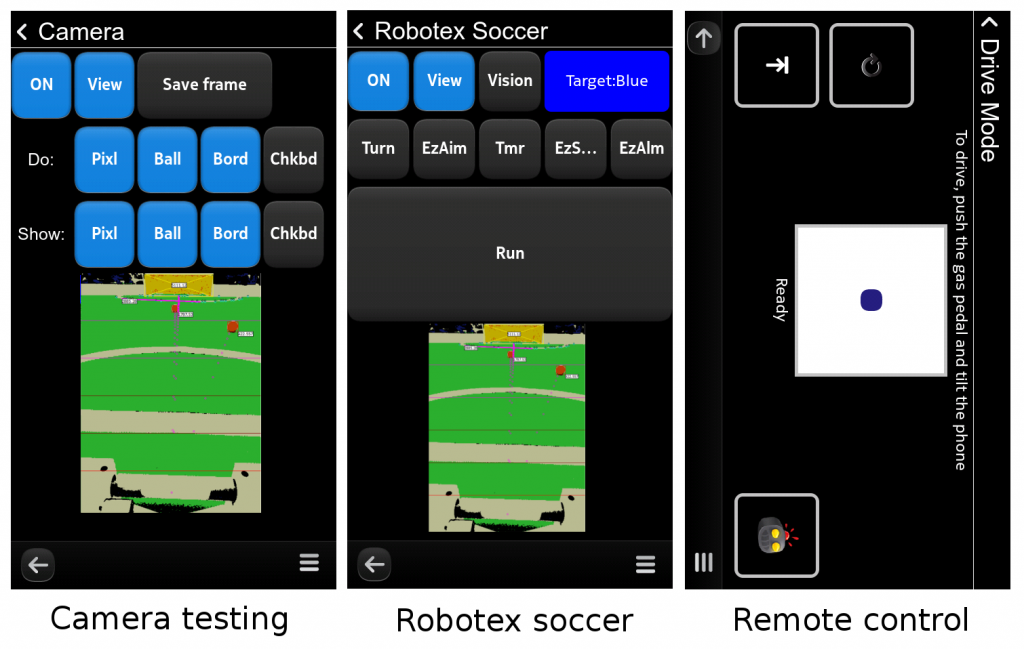

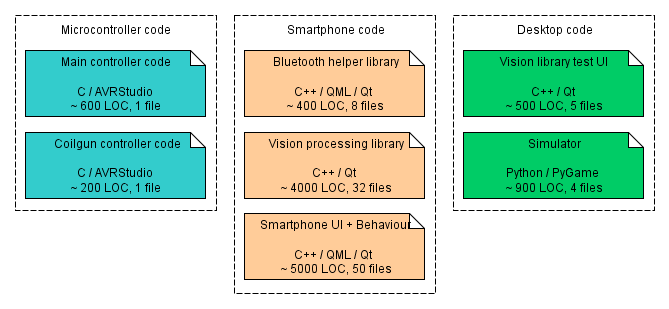

The modules of our vision system that we have just discussed actually look as follows:

    class BallDetector {
    public:
        // Construct a Ball detector object, providing references
        // to all the necessary components
        BallDetector(const CMVProcessor& cmv,
                     const CoordinateMapper& cmapper,
                     const GoalDetector& goalDetector,
                     const WallProximityDetector& wallProximity);

        void processFrame();                  // Extract visible balls from frame
        void paint(QPainter* painter) const;  // Paint for debugging

        QList<Ball> balls;                    // Detected balls

    protected:
        // Main filtering method:
        // Given a blob rectangle, checks whether it contains a ball
        Ball checkBall(const QRect& rect, int area) const;

        // ... some details omitted ... //
    };

    class GoalDetector {
    public:
        GoalDetector(const CMVProcessor& cmv,
                     const CoordinateMapper& cmapper,
                     const WallProximityDetector& wallProximity);

        void processFrame();                  // Extract visible goals
        void paint(QPainter* painter) const;  // Paint for debugging

        Goal yellow;                          // Detected yellow goal (if any)
        Goal blue;                            // Detected blue goal (if any)

    protected:
        // Main method:
        // Given a rect, checks whether it is a valid goal
        Goal checkGoal(const QRect& rect, bool yellow, int area);

        // ... some details omitted ... //
    };

    class BorderLocator {
    public:
        BorderLocator(const CMVProcessor& cmv,
                      const CoordinateMapper& cmapper);

        // Shoots a probe at a specific point in world coordinates
        // Returns a BorderProbe object with shot results
        BorderProbe shootAtPoint(QPointF worldPoint) const;

    protected:
        // ... omitted ... //
    };

An attentive reader will notice the WallProximityDetector module mentioned in the code. We shall come to it in a later post.